Transitioning to AI Product Management: A Practical Guide with Sentiment Analysis

Hands-on strategies to integrate AI capabilities and enhance product experiences.

AI is changing the way products are built and experienced, and Product Managers (PMs) are now stepping into roles where traditional skills meet cutting-edge technology. Moving into an AI Product Manager (AI PM) role is not just about learning new concepts; it’s about understanding how to integrate AI capabilities like sentiment analysis into products that truly solve user needs.

Sentiment analysis is one of the simplest ways to explore the power of AI. It helps decode user emotions and feedback, giving valuable insights to improve customer experience. This blog will cover:

How to approach product management with an AI mindset.

A step-by-step guide to importing and using a pre-trained sentiment analysis model with Python.

Practical tips to run the code and use it for building user-focused features.

The goal is to make AI approachable for PMs and provide a hands-on way to start exploring its potential. Whether you are new to AI or already curious about how to apply it, this guide will help you take the first steps.

Thinking Like an AI Product Manager

Traditional Product Managers focus on features, user journeys, and achieving product-market fit. While these remain important, stepping into the role of an AI Product Manager means evolving your thought process to account for the unique capabilities and challenges of AI.

Shift to Outcomes: The focus moves beyond delivering features to understanding the outcomes AI enables. These might include automating repetitive tasks, making accurate predictions, or significantly enhancing user experience. Instead of asking, "What feature should we build?" the question becomes, "What value can AI create for the user?"

Data as the Foundation: AI is only as good as the data it learns from. A strong AI PM understands the lifecycle of data—what data the product generates, how to clean and organize it, and how to use it for training or fine-tuning models. Building a product roadmap now involves considering not just features but also the quality, availability, and privacy of data.

Experimentation First: AI thrives in environments that allow rapid experimentation and iteration. Testing ideas, validating models, and measuring impact become part of the workflow. Unlike traditional product management, where features often follow a linear development path, AI capabilities demand iterative cycles to refine and deliver genuine value.

Take sentiment analysis as an example. Integrating it into a CRM application could transform how customer queries are managed. By classifying queries as positive, negative, or neutral, sentiment analysis enables personalized responses, better prioritization of support tickets, and smarter allocation of resources. This is the kind of impact that comes from thinking like an AI PM—focusing on outcomes, leveraging data, and embracing experimentation to drive meaningful results.

Understanding Sentiment Analysis in AI Products

Sentiment analysis is a natural language processing (NLP) technique used to identify the emotional tone behind a piece of text—whether it’s positive, negative, or neutral. This capability is widely applied across various domains to improve user experiences and decision-making processes. Common examples include:

Customer feedback analysis: Understanding how users feel about your product or service to prioritize improvements.

Social media monitoring: Tracking sentiment trends to measure brand perception or the success of marketing campaigns.

Prioritizing customer complaints: Quickly categorizing and addressing urgent or critical issues for better service delivery.

Why Use Pre-Trained Models?

Building and training AI models from scratch requires deep technical expertise, a significant amount of data, and computational resources. For most applications, this isn’t practical. Instead, AI Product Managers can save time and resources by leveraging pre-trained models. These models have already been trained on large datasets and fine-tuned for specific tasks, making them ready to use with minimal additional effort.

One example is Hugging Face’s distilbert-base-uncased-finetuned-sst-2-english, a model fine-tuned for sentiment analysis tasks. By using such models, AI PMs can quickly prototype, test, and deploy features like sentiment analysis without the need to build everything from scratch. This approach enables faster iteration and ensures that your focus remains on solving user problems and delivering value.

Adopting pre-trained models not only lowers the barrier to entry but also helps AI PMs gain hands-on experience with AI technologies, which is essential for making informed product decisions.

Hands-On: Using Sentiment Analysis with Python

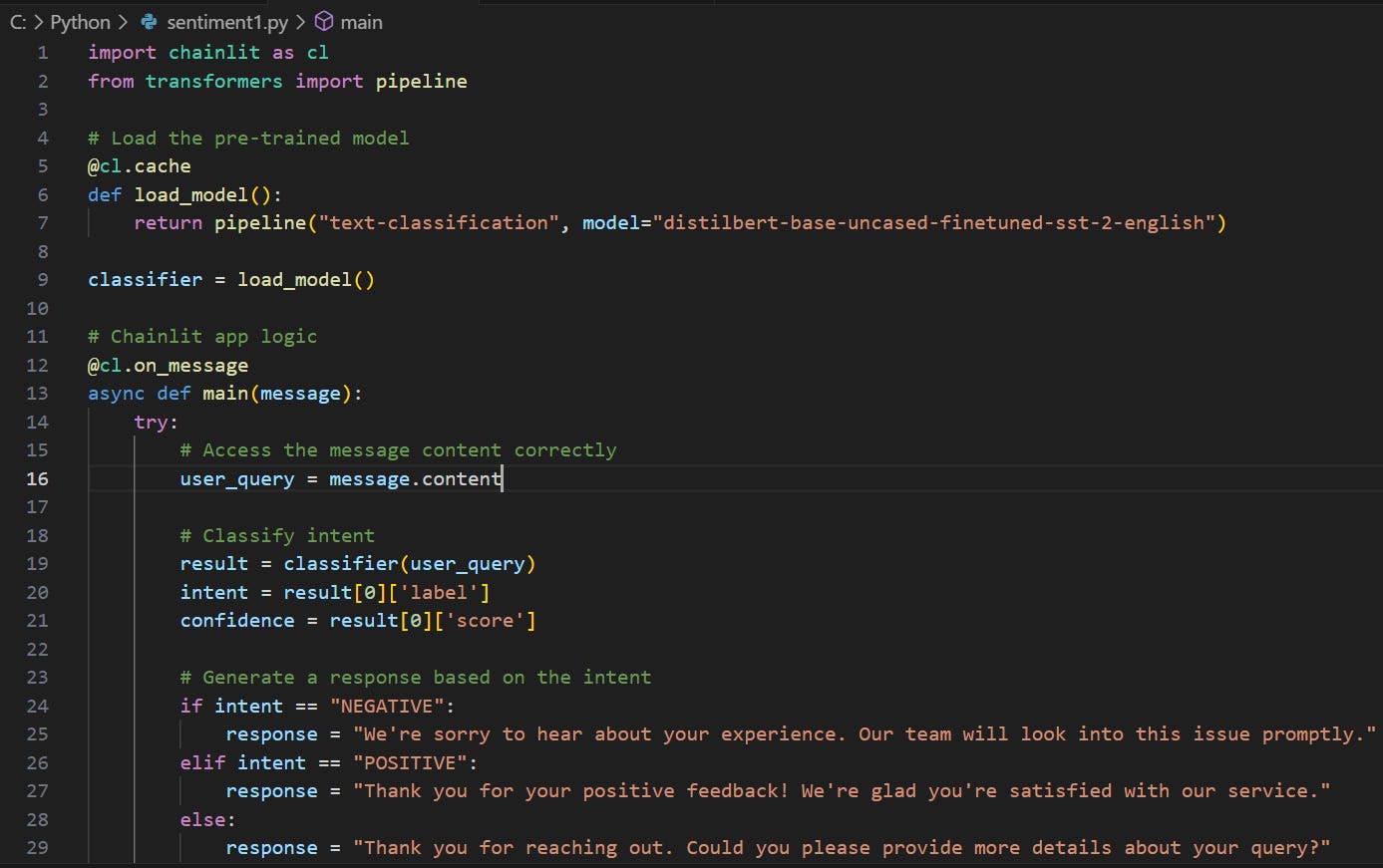

Below is an example of using Chainlit and Python to implement a sentiment analysis feature with minimal effort. This code demonstrates how to integrate a pre-trained model, classify user input, and respond dynamically based on sentiment. The workflow is straightforward, leveraging the power of Hugging Face's distilbert-base-uncased-finetuned-sst-2-english model for text classification.

The Code Breakdown

The Python code provided demonstrates how to integrate a pre-trained sentiment analysis model into a conversational AI application using Chainlit. This approach enables quick deployment of a sentiment analysis feature that responds dynamically based on user input. Let’s walk through the key components step by step.

1. Importing the Libraries

```python
import chainlit as cl
from transformers import pipeline
```

Chainlit: A framework designed for building conversational AI applications. It simplifies the process of creating chat interfaces that handle real-time user interactions.

Transformers: A versatile library by Hugging Face that provides pre-trained AI models for a wide range of natural language processing (NLP) tasks such as sentiment analysis, text generation, translation, and more.

These libraries form the backbone of the application, enabling you to combine conversational interfaces with advanced AI capabilities.

2. Loading the Pre-Trained Model

```python
@cl.cache
def load_model():
    # Build a text-classification pipeline around a DistilBERT model fine-tuned for sentiment
    return pipeline("text-classification", model="distilbert-base-uncased-finetuned-sst-2-english")
```

pipeline(): A utility from the Transformers library that simplifies the process of loading and using pre-trained models for specific tasks. In this case, we’re using a text classification pipeline, which is designed for tasks like sentiment analysis.

model="distilbert-base-uncased-finetuned-sst-2-english": Specifies the pre-trained model to use. This model is fine-tuned on SST-2 (the Stanford Sentiment Treebank, a binary sentiment dataset of movie-review sentences) and classifies text as either POSITIVE or NEGATIVE.

Why Use Caching?

The @cl.cache decorator ensures the model is loaded into memory only once during the application’s lifecycle. This avoids the overhead of reloading the model with each user interaction, significantly improving performance and reducing latency for subsequent requests.

By caching the model, the application becomes more efficient, especially when handling multiple user queries in real-time.
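To see why caching matters, the sketch below uses Python's built-in functools.cache as an analogy for @cl.cache: both memoize a function's return value, so repeated calls skip the expensive work. This is an illustration, not Chainlit's implementation, and load_model here is a hypothetical stub that returns a fake classifier instead of loading the real model.

```python
import functools
import time

load_count = 0  # counts how many times the "expensive" load actually runs

@functools.cache
def load_model():
    """Hypothetical stand-in for pipeline(...); pretends that loading is slow."""
    global load_count
    load_count += 1
    time.sleep(0.1)  # simulate reading model weights from disk
    return lambda text: [{"label": "POSITIVE", "score": 0.98}]

# Simulate three incoming chat messages; only the first call pays the load cost.
for _ in range(3):
    classifier = load_model()

print(load_count)  # prints 1
```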

3. Handling User Input

```python
@cl.on_message
async def main(message: cl.Message):
    try:
        user_query = message.content
```

This block is where the application interacts with the user in real time. Here's what happens:

@cl.on_message: A decorator provided by Chainlit to listen for and handle incoming messages from the chat interface. Each time a user sends a message, this function is triggered.

message.content: Extracts the text content of the user’s query. This allows the application to process the user's input dynamically.

user_query: Stores the user’s input for further processing, including passing it to the sentiment analysis model for classification.

By capturing the user’s input seamlessly, this block sets the stage for analyzing the query and generating a meaningful response, making it the cornerstone of the conversational flow.

4. Performing Sentiment Analysis

```python
        classifier = load_model()  # returns the cached pipeline after the first call
        result = classifier(user_query)
        intent = result[0]["label"]
        confidence = result[0]["score"]
```

Here’s what happens in this step:

load_model(): Returns the sentiment pipeline. Because of @cl.cache, only the first call actually loads the model; later calls reuse it.

classifier(user_query): The pre-trained model processes the input text provided by the user. Using the text classification pipeline, it analyzes the sentiment of the text and returns a list containing one prediction.

intent: Extracts the sentiment label from the model’s output. This SST-2 model only ever returns POSITIVE or NEGATIVE; other models can return additional labels such as NEUTRAL.

confidence: Captures the confidence score of the prediction. This is a numerical value (between 0 and 1) that represents how confident the model is about its classification.

For example, if a user types "I love the service," the model might output:

```python
[{'label': 'POSITIVE', 'score': 0.98}]
```

Here, the intent is POSITIVE, and the confidence score is 0.98, indicating high certainty in the result.

By extracting these values, the application is equipped to interpret user emotions and tailor responses accordingly. This step bridges the gap between raw AI output and actionable insights.
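Because the pipeline returns a list of {"label", "score"} dictionaries, the extraction logic can be tested without downloading the model. The sketch below assumes a hypothetical fake_classifier stub (a toy keyword rule, not a real sentiment model) and a hypothetical helper named extract_sentiment; neither is part of Transformers.

```python
from typing import Callable

# Hypothetical stub that mimics the pipeline's output shape:
# a list with one {"label": ..., "score": ...} dict per input text.
def fake_classifier(text: str) -> list[dict]:
    label = "NEGATIVE" if "not" in text.lower() else "POSITIVE"
    return [{"label": label, "score": 0.98}]

def extract_sentiment(result: list[dict]) -> tuple[str, float]:
    """Pull the label and confidence out of a single-text pipeline result."""
    if not result:
        raise ValueError("classifier returned no predictions")
    top = result[0]
    return top["label"], float(top["score"])

def classify(classifier: Callable[[str], list[dict]], text: str) -> tuple[str, float]:
    """Run any classifier with the pipeline's output shape and extract its prediction."""
    return extract_sentiment(classifier(text))

print(classify(fake_classifier, "I love the service"))   # ('POSITIVE', 0.98)
print(classify(fake_classifier, "This is not working"))  # ('NEGATIVE', 0.98)
```

The same classify function accepts the real pipeline returned by load_model(), so response logic built on it can be developed against the stub first.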

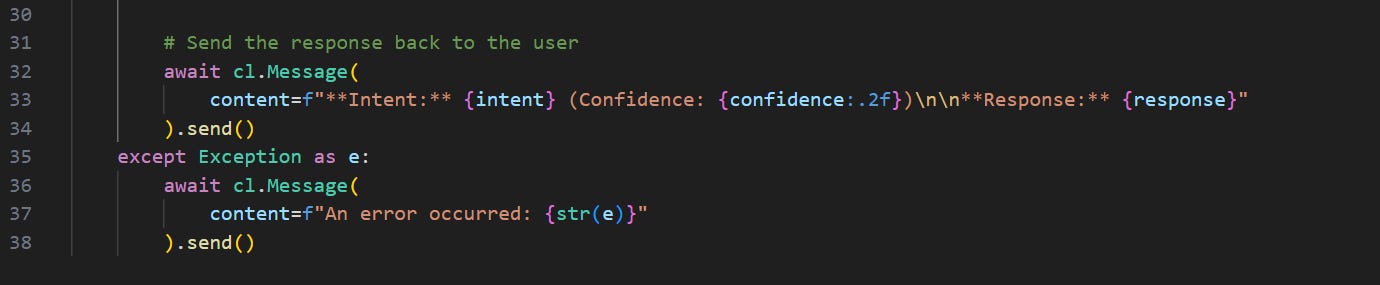

5. Generating a Response

```python
        if intent == "NEGATIVE":
            response = "We're sorry to hear about your experience. Our team will look into this issue promptly."
        elif intent == "POSITIVE":
            response = "Thank you for your positive feedback! We're glad you're satisfied with our service."
        else:
            response = "Thank you for reaching out. Could you please provide more details about your query?"
```

In this step, the application uses the predicted sentiment (intent) to craft a relevant and empathetic response. Here's the breakdown:

Negative Sentiment: If the sentiment is classified as "NEGATIVE," the response acknowledges the user's dissatisfaction and assures them that the issue will be addressed. This builds trust and shows proactive support.

Positive Sentiment: For a "POSITIVE" sentiment, the application expresses gratitude and reinforces the user’s positive experience, fostering goodwill and customer satisfaction.

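Neutral or Uncertain Sentiment: The else branch politely requests more details, keeping the conversation constructive and user-focused. Because this SST-2 model only returns POSITIVE or NEGATIVE, the branch acts as a fallback: it only runs if you swap in a model with other labels (such as NEUTRAL) or add your own check for low-confidence predictions.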

By tailoring responses to the user's sentiment, the application creates a more personalized and engaging experience. This dynamic interaction is key to enhancing user satisfaction and delivering value in conversational AI.
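Since this SST-2 model never returns NEUTRAL, one way to make the clarifying-question branch reachable is a confidence threshold. The sketch below is a hypothetical refactor of the if/elif/else logic into a lookup table; the 0.75 cut-off is an arbitrary assumption that you would tune on real feedback.

```python
# Hypothetical cut-off: predictions below this confidence are treated as uncertain.
CONFIDENCE_THRESHOLD = 0.75

RESPONSES = {
    "NEGATIVE": "We're sorry to hear about your experience. Our team will look into this issue promptly.",
    "POSITIVE": "Thank you for your positive feedback! We're glad you're satisfied with our service.",
}
FALLBACK = "Thank you for reaching out. Could you please provide more details about your query?"

def choose_response(intent: str, confidence: float, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return a canned reply, asking for more details when the model is unsure."""
    if confidence < threshold:
        return FALLBACK
    # Labels without a canned reply (e.g. NEUTRAL from another model) also fall back.
    return RESPONSES.get(intent, FALLBACK)

print(choose_response("NEGATIVE", 0.98))  # the apology message
print(choose_response("POSITIVE", 0.55))  # the clarifying question
```

Keeping the replies in a dictionary also makes it easy for non-engineers to review and edit the wording.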

6. Sending the Response

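```python
        await cl.Message(
            content=f"**Intent:** {intent} (Confidence: {confidence:.2f})\n\n**Response:** {response}"
        ).send()
    except Exception as e:
        # Surface errors (e.g. model failures) in the chat instead of failing silently
        await cl.Message(content=f"Sorry, something went wrong: {e}").send()
```

This step delivers the generated response to the user through the Chainlit chat interface. The except clause closes the try block opened when the message was received and reports any error back to the user. Here's how it works: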

cl.Message(): Creates a new message object for the chat interface. The content parameter is used to specify the message text.

Formatted Output: The message includes the following:

Intent: Displays the predicted sentiment (e.g., POSITIVE, NEGATIVE) to provide transparency about the analysis.

Confidence: Shows the model’s confidence score (formatted to two decimal places), offering insight into the reliability of the prediction.

Response: Provides a context-specific reply based on the sentiment analysis result.

.send(): Sends the message back to the user in real time, ensuring a seamless conversational flow.

This ensures the user feels acknowledged and understood, closing the loop on the sentiment analysis process with a polished, user-friendly interaction.

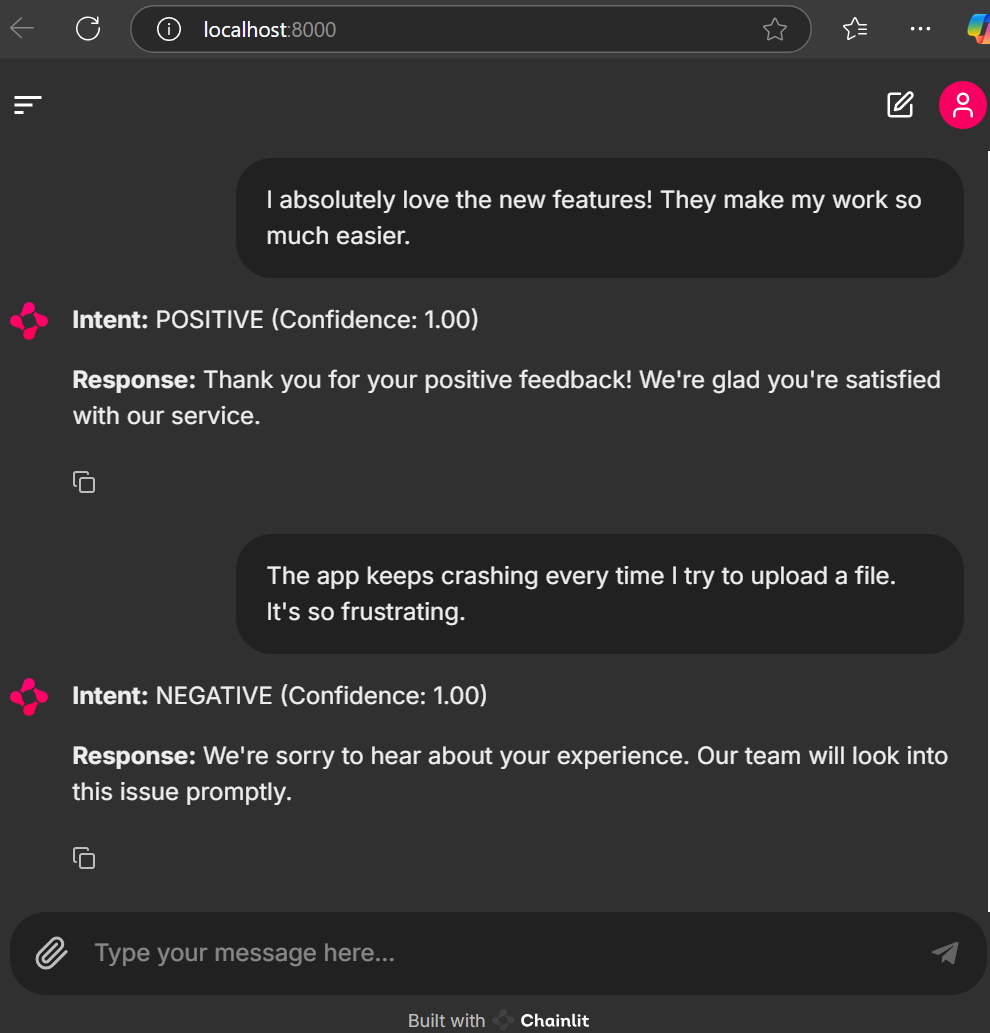

Sentiment Analysis Chat Response Using Chainlit

How to Run and Execute This Code

Setting up and running the sentiment analysis chatbot is straightforward. Follow these steps to get started:

1. Install the Dependencies

Before running the code, ensure you have the required libraries installed in your environment. Use the following command:

```bash
pip install chainlit transformers torch
```

This installs Chainlit for creating the conversational interface, Transformers for working with the pre-trained model, and PyTorch, which Transformers needs as the backend that actually runs the model.

2. Save the Code

Combine the code snippets from steps 1 through 6, in order, into a single file named sentiment.py in your working directory. This will serve as the main application file for the chatbot.

3. Run the Chainlit App

Launch the Chainlit server by running the following command in your terminal:

```bash
chainlit run sentiment.py
```

Once the server starts, you’ll see a URL in your terminal output indicating where the chatbot is hosted.

4. Interact with the App

Open the URL (e.g., http://localhost:8000) in your web browser. You’ll see a clean chat interface where you can interact with the sentiment analysis application.

From Code to a Deployed AI Feature

Building a working prototype is just the beginning. To transform the sentiment analysis chatbot into a product-ready feature, the following steps can help you bridge the gap between development and deployment:

1. API Deployment

Expose the sentiment analysis model as an API to make it accessible to other applications.

Use FastAPI: Create a lightweight and efficient REST API that processes text input and returns sentiment labels and confidence scores.

Consider AWS Lambda: If you're looking for a serverless solution, AWS Lambda can host your model as a scalable function. This is ideal for minimizing infrastructure management.
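Whichever hosting option you choose, the endpoint's core job is turning pipeline output into structured JSON with a sentiment label and a confidence score. The sketch below leaves out the FastAPI or Lambda wiring to stay dependency-free; to_payload, handle_batch, and fake_batch_classifier are hypothetical names. It relies on the fact that a Transformers pipeline given a list of texts returns one prediction per text, in the same order.

```python
import json

def to_payload(prediction: dict) -> dict:
    """Map one pipeline prediction to the API's response fields."""
    return {"sentiment": prediction["label"], "confidence": float(prediction["score"])}

def handle_batch(texts: list[str], classifier) -> str:
    """Hypothetical request handler: classify a batch of feedback and return a JSON body."""
    predictions = classifier(texts)  # one {"label", "score"} dict per text, in input order
    return json.dumps([to_payload(p) for p in predictions])

def fake_batch_classifier(texts: list[str]) -> list[dict]:
    # Hypothetical stand-in for the pipeline called on a list of texts (toy keyword rule)
    return [{"label": "NEGATIVE" if "never" in t else "POSITIVE", "score": 0.98} for t in texts]

print(handle_batch(["I love the service", "My order never arrived"], fake_batch_classifier))
# [{"sentiment": "POSITIVE", "confidence": 0.98}, {"sentiment": "NEGATIVE", "confidence": 0.98}]
```

Batching several feedback entries per request is usually cheaper than one call per entry, which matters once the API is serving a CRM.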

Example:

The API could accept customer feedback as input and return structured data like:

```json
{ "sentiment": "POSITIVE", "confidence": 0.98 }
```

2. Integration with CRM

Integrate the API into your Customer Relationship Management (CRM) system to automate processes and enhance workflows.

Ticket Categorization: Automatically classify incoming tickets as positive, negative, or neutral to prioritize responses based on urgency.

Response Generation: Leverage the sentiment results to provide AI-powered, pre-drafted responses that customer service representatives can review and send.

Impact: This reduces manual effort, improves response times, and ensures consistency in customer communication.
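For ticket categorization, one simple prioritization policy is to put NEGATIVE tickets first, ordered by the model's confidence, and leave everything else in arrival order. The sketch below assumes that policy and a hypothetical ticket format with id, sentiment, and confidence fields; a real CRM would define its own schema and would likely weigh other signals as well.

```python
def prioritize(tickets: list[dict]) -> list[dict]:
    """Return tickets in handling order under a hypothetical policy:
    NEGATIVE first (most confident first), then everything else in arrival order."""
    def sort_key(ticket: dict) -> tuple[int, float]:
        if ticket["sentiment"] == "NEGATIVE":
            return (0, -ticket["confidence"])
        return (1, 0.0)
    # sorted() is stable, so tickets with equal keys keep their arrival order
    return sorted(tickets, key=sort_key)

# Illustrative tickets, not real data.
tickets = [
    {"id": "T-101", "sentiment": "POSITIVE", "confidence": 0.97},
    {"id": "T-102", "sentiment": "NEGATIVE", "confidence": 0.71},
    {"id": "T-103", "sentiment": "NEGATIVE", "confidence": 0.99},
    {"id": "T-104", "sentiment": "POSITIVE", "confidence": 0.88},
]
print([t["id"] for t in prioritize(tickets)])  # ['T-103', 'T-102', 'T-101', 'T-104']
```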

3. Scalability

To handle real-world usage and growing demand, scalability is key.

Deploy on AWS Elastic Beanstalk: Package the application and deploy it with Elastic Beanstalk for automatic load balancing and scalability.

Containerize with Docker: Use Docker to package the application along with its dependencies. Deploy the container on Kubernetes or other orchestration platforms to scale seamlessly across multiple environments.

4. Monitoring and Optimization

Once deployed, monitoring performance is essential:

Use CloudWatch (AWS) or Prometheus/Grafana to track usage, response times, and errors.

Regularly retrain the model with updated data to improve its accuracy and relevance.
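Before wiring up CloudWatch or Prometheus, it helps to decide what to measure. The sketch below wraps any classifier to count calls and errors, record latency, and tally predicted labels; a shift in the label mix over time is one possible signal that retraining is due. ClassifierStats, monitored, and fake_classifier are hypothetical names, and exporting the numbers to a monitoring backend is not shown.

```python
import time
from dataclasses import dataclass, field
from statistics import mean

@dataclass
class ClassifierStats:
    """In-process counters you might later export to a monitoring backend."""
    calls: int = 0
    errors: int = 0
    latencies_ms: list[float] = field(default_factory=list)
    labels: dict[str, int] = field(default_factory=dict)

def monitored(classifier, stats: ClassifierStats):
    """Wrap a classifier so every call records latency, errors, and predicted labels."""
    def wrapper(text: str):
        stats.calls += 1
        start = time.perf_counter()
        try:
            result = classifier(text)
        except Exception:
            stats.errors += 1
            raise
        finally:
            # Latency is recorded for successful and failed calls alike
            stats.latencies_ms.append((time.perf_counter() - start) * 1000)
        label = result[0]["label"]
        stats.labels[label] = stats.labels.get(label, 0) + 1
        return result
    return wrapper

# Hypothetical stub classifier so the sketch runs without the real model.
def fake_classifier(text: str):
    if not text.strip():
        raise ValueError("empty input")
    return [{"label": "NEGATIVE" if "broken" in text else "POSITIVE", "score": 0.9}]

stats = ClassifierStats()
classify = monitored(fake_classifier, stats)
for msg in ["Great app", "The checkout is broken", "   "]:
    try:
        classify(msg)
    except ValueError:
        pass  # in production, log the error instead of ignoring it

print(stats.calls, stats.errors, stats.labels)  # 3 1 {'POSITIVE': 1, 'NEGATIVE': 1}
print(f"mean latency: {mean(stats.latencies_ms):.3f} ms")
```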

Example Use Case in Production

Imagine your CRM is receiving thousands of customer feedback entries daily. By deploying this sentiment analysis feature:

Negative tickets are flagged and prioritized for immediate action.

Positive feedback is acknowledged automatically with pre-crafted responses.

Neutral feedback is analyzed for trends, helping the product team identify areas for improvement.
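The three paths above can be expressed as a lookup from label to action plus a daily tally. This sketch assumes a three-class model that can emit NEUTRAL (the SST-2 model used earlier cannot), hypothetical action names, and illustrative predictions rather than real data.

```python
from collections import Counter

# Hypothetical action names for the three paths described above.
ACTIONS = {
    "NEGATIVE": "priority_queue",
    "POSITIVE": "auto_acknowledge",
    "NEUTRAL": "trend_analysis",
}

def route_feedback(predictions: list[dict]) -> dict[str, list[str]]:
    """Group feedback IDs by the action their predicted label maps to."""
    buckets: dict[str, list[str]] = {action: [] for action in ACTIONS.values()}
    for p in predictions:
        # Unknown labels go to trend analysis rather than being dropped.
        action = ACTIONS.get(p["label"], "trend_analysis")
        buckets[action].append(p["id"])
    return buckets

predictions = [
    {"id": "F-1", "label": "NEGATIVE"},
    {"id": "F-2", "label": "POSITIVE"},
    {"id": "F-3", "label": "NEUTRAL"},
    {"id": "F-4", "label": "NEGATIVE"},
]
buckets = route_feedback(predictions)
daily_summary = Counter(p["label"] for p in predictions)
print(buckets["priority_queue"])      # ['F-1', 'F-4']
print(daily_summary.most_common(1))   # [('NEGATIVE', 2)]
```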

Transitioning from code to a deployed feature involves technical steps but adds immense value to your product. This approach showcases the power of AI in delivering scalable, customer-focused solutions.

Insights for Emerging AI Product Managers

Simplify AI with Pre-Trained Models: Using pre-trained models like Hugging Face’s offerings streamlines development, making it easier to prototype and deploy AI features without needing extensive data science expertise.

Focus on Real-World Use Cases: Sentiment analysis isn’t just about text classification—it’s a tool to enhance customer experiences. Automate responses, prioritize critical issues, and identify feedback trends to drive actionable improvements.

Embrace Hands-On Learning: Practical exercises like this provide a solid understanding of AI workflows—from importing a pre-trained model to integrating it into a functional application. Experience is key to bridging the gap between theory and application.

Foster Collaboration with Technical Teams: AI Product Managers act as the bridge between business goals and technical solutions. Understand the technical aspects enough to communicate effectively and align AI capabilities with user needs and product strategy.

These approaches empower AI Product Managers to navigate the complexity of AI and deliver meaningful features that create value for users and businesses alike.

Transitioning into AI Product Management demands curiosity, a willingness to learn, and practical experience. Working on projects like this sentiment analysis app helps you build the confidence and skills needed to integrate AI capabilities seamlessly into your products. At its core, AI is not just about advanced technology—it’s about crafting intelligent solutions that create meaningful value for your users.

Disclaimer: The views and insights presented in this blog are derived from information sourced from various public domains on the internet and the author's research on the topic. They do not reflect any proprietary information associated with the company where the author is currently employed or has been employed in the past. The content is purely informative and intended for educational purposes, with no connection to confidential or sensitive company data.