Harnessing NVIDIA's Inference Microservices: A Strategic Guide for Product Managers on Optimizing AI Inference Workloads

Empowering Scalable, Real-Time AI Solutions with NVIDIA’s NIM Across Cloud and Edge Environments

In the world of artificial intelligence (AI), particularly with generative AI and large language models (LLMs), real-time inference is critical to delivering meaningful and responsive applications. Inference is the phase where AI models, already trained on extensive data, process new inputs to make predictions or generate responses. Inference is what powers real-time interactions in applications from customer service chatbots to medical diagnosis support. However, effective deployment of inference-based AI workloads requires robust infrastructure, highly efficient processing, and flexible, scalable architectures. Enter NVIDIA’s Inference Microservices (NIM), a solution designed to optimize and simplify the deployment of inference models, whether in the cloud or at the edge.

For product managers, understanding the advantages, applications, and deployment options for inference-based AI is essential to harnessing AI’s full potential. NVIDIA NIM offers a modular, efficient, and cost-effective approach, enabling the seamless deployment and scaling of powerful AI models across diverse environments. This article explores how NIM addresses the challenges of inference-based workloads and provides actionable insights for product managers.

Understanding Inference-Based AI Workloads and GPU Utilization

AI models require two main phases to function effectively: training and inference.

Training Phase: During training, an AI model learns patterns from a vast dataset, adjusting its parameters to minimize errors. This resource-intensive process is typically performed offline and requires significant computational power.

Inference Phase: Inference is where the trained model applies its knowledge to make predictions on new data in real time. This phase is critical in production environments, where responsiveness can directly impact user experience and overall system performance.

For large language models, inference consumes substantial GPU memory and processing power because each response is generated token by token. Generating each token requires reading the model’s weights, which must stay resident in GPU memory, so the resource demands are significant. Product managers can achieve better performance and lower latency by optimizing GPU usage, particularly through techniques like request batching and memory-efficient caching.
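A rough memory estimate makes these resource demands concrete. The sketch below is a back-of-envelope calculation, not a sizing tool: the parameter count, layer count, head dimensions, context length, and concurrency are all illustrative assumptions for a hypothetical 70-billion-parameter model, and real deployments carry extra overhead for activations and runtime buffers.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def kv_cache_memory_gb(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    context_tokens: int,
    concurrent_sequences: int,
    bytes_per_value: int = 2,
) -> float:
    """Memory for cached attention keys and values across all active sequences."""
    # Factor of 2: one key vector and one value vector per layer, per token.
    per_token_bytes = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens * concurrent_sequences / 1e9


# Assumed, illustrative figures (not taken from any NIM documentation).
weights_gb = weight_memory_gb(num_params=70e9, bytes_per_param=2)  # 16-bit weights
cache_gb = kv_cache_memory_gb(
    num_layers=80,
    num_kv_heads=8,
    head_dim=128,
    context_tokens=4096,
    concurrent_sequences=16,
)

print(f"weights: {weights_gb:.1f} GB, KV cache: {cache_gb:.1f} GB")
```

Under these assumptions the weights alone exceed the memory of a single typical data-center GPU, which is why precision and concurrency settings matter for cost.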

The Shift from Monolithic to Microservices Architectures in AI

Traditional software systems were often built using monolithic architectures, where all components were interconnected in a single application. While monolithic systems have a straightforward structure, they pose challenges in terms of scalability, flexibility, and maintainability. As the need for adaptability and responsiveness grew, microservices architectures emerged, breaking applications into smaller, independent services. Each service can be developed, deployed, and scaled separately, bringing multiple benefits:

Modularity: Isolated services make updates and maintenance easier and safer.

Scalability: Specific components can scale independently according to demand, optimizing resources and reducing costs.

Flexibility: Diverse technologies can be integrated, enabling faster adaptation to evolving business requirements.

This shift toward modularity has also influenced AI deployments. Instead of relying on a single, large model, AI systems are now adopting specialized models, each focused on a distinct function. NVIDIA’s Inference Microservices framework brings this microservices architecture to AI deployments, offering modular solutions that can be easily deployed and scaled based on application needs.

NVIDIA NIM: Empowering Product Managers with Scalable, Flexible AI Deployments

NVIDIA NIM enables product managers to simplify AI model deployment through containerized microservices. This allows AI models to operate as independent services that can be efficiently scaled across cloud and edge environments. The modular nature of NIM’s architecture is particularly useful in high-demand applications, as each containerized model can operate independently, enabling optimized resource utilization and greater agility.

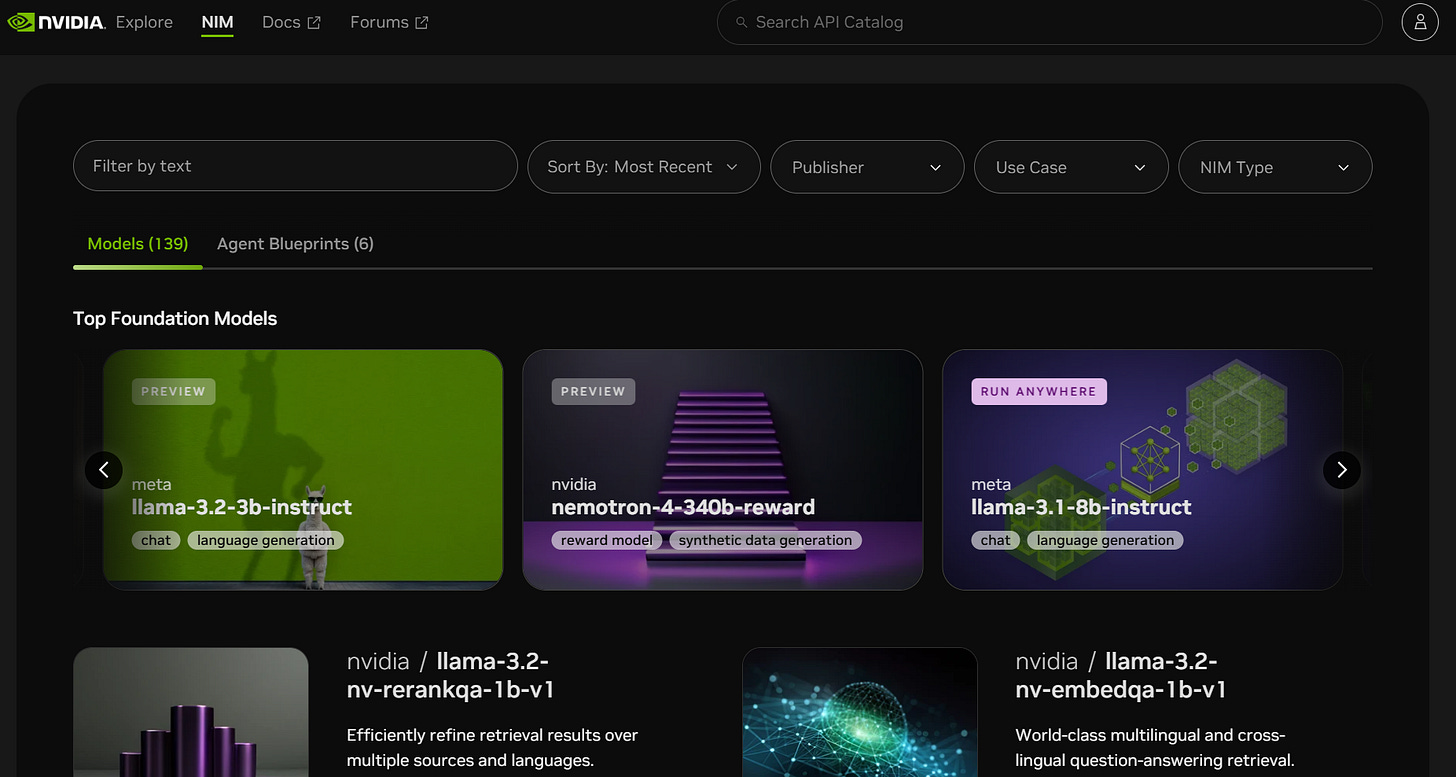

NVIDIA NIM API Catalog Page

Key Features of NVIDIA NIM

Containerized Models: NIM provides AI models pre-packaged as containers with all necessary dependencies. This allows for deployment flexibility across cloud, on-premises, and edge environments, ensuring faster time-to-market and a streamlined setup process.

Dynamic Resource Allocation with Batch Processing: NIM supports dynamic batching, enabling the efficient processing of multiple requests simultaneously. This feature is especially useful in high-traffic applications, such as recommendation engines and real-time chatbots, where optimized resource allocation is essential for maintaining low latency.

Memory Optimization with KV Cache: For applications requiring continuous interaction, like customer service bots, maintaining context between exchanges is crucial. NIM’s Key-Value (KV) cache stores the attention keys and values already computed for earlier tokens, so the model can reuse that context instead of recalculating it for each new token. This significantly reduces latency and improves the user experience by enabling faster responses.

Precision Optimization and Model Quantization: NIM includes advanced precision optimizations, such as FP8 quantization, which reduces the memory footprint of models with little loss of accuracy. These optimizations can make it more practical to deploy inference-based AI on resource-limited devices, a significant benefit for real-time applications running on edge devices.
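To illustrate the dynamic batching idea from the feature list above, here is a toy offline simulation. It is not NIM's scheduler; it only shows one common policy, assumed here for illustration: dispatch a batch when it is full, or when the oldest waiting request has exceeded a maximum wait time. The names `Request`, `form_batches`, `max_batch_size`, and `max_wait_ms` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    request_id: str
    arrival_ms: float


def form_batches(
    requests: List[Request], max_batch_size: int, max_wait_ms: float
) -> List[List[Request]]:
    """Group requests into batches by size limit and wait-time limit."""
    batches: List[List[Request]] = []
    current: List[Request] = []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        # Close the open batch if its oldest request would wait too long.
        if current and req.arrival_ms - current[0].arrival_ms > max_wait_ms:
            batches.append(current)
            current = []
        current.append(req)
        # Close the batch as soon as it is full.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


arrivals = [0, 2, 3, 20, 21, 22, 23, 24, 60]
requests = [Request(f"r{i}", float(t)) for i, t in enumerate(arrivals)]
batches = form_batches(requests, max_batch_size=4, max_wait_ms=10)
batch_sizes = [len(b) for b in batches]
print(batch_sizes)
```

The two parameters express the trade-off a product manager would tune: larger batches raise GPU throughput, while a shorter maximum wait protects latency during quiet periods.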

Deployment Options for NIM on Amazon SageMaker and EKS

For organizations already utilizing cloud platforms, NVIDIA NIM can be deployed on services like Amazon SageMaker and Amazon Elastic Kubernetes Service (EKS), both of which provide extensive support for scalable AI workloads.

Amazon SageMaker

Amazon SageMaker is a fully managed machine learning service that supports the building, training, and deployment of ML models. By integrating NIM with SageMaker, product managers gain access to a scalable, managed infrastructure that simplifies deployment, tracking, and resource management. SageMaker handles monitoring, resource scaling, and versioning, enabling teams to focus on optimizing the user experience rather than managing complex infrastructure.

Amazon EKS

For teams requiring customized configurations, Amazon EKS offers a managed Kubernetes service that integrates seamlessly with NIM. Deploying NIM on EKS allows for precise control over containerized environments, which is beneficial for enterprise applications with complex requirements. Product managers can take advantage of EKS’s orchestration and scaling capabilities to manage high-demand AI workloads efficiently.

Comparison with Competitive Alternatives: Amazon Bedrock, Hugging Face, and Others

While NVIDIA NIM offers an optimized framework for deploying containerized AI models with exceptional support for GPU-accelerated workloads, several other platforms provide valuable features that may suit different business needs or deployment preferences. Here’s a brief overview of some competitive alternatives and how they compare:

Amazon Bedrock: Amazon’s Bedrock platform provides a range of foundation models and is fully integrated with Amazon Web Services. Bedrock excels in offering managed AI services, including popular open-source models and proprietary models developed by Amazon. For product managers who prioritize a tightly integrated, managed environment with broad access to AWS resources (like data lakes, pipelines, and security), Bedrock may be an appealing choice. However, NIM's direct optimization for NVIDIA GPUs can offer performance advantages for compute-intensive, real-time applications where speed and latency are critical.

Hugging Face: Hugging Face is a widely used platform for open-source models, including LLMs and multimodal models. With a vast model repository and community support, Hugging Face appeals to product teams looking for flexibility and an extensive range of model choices. While Hugging Face’s platform is excellent for experimentation, training, and deploying smaller or customized models, it may lack the deep GPU integration and performance optimizations that NIM offers, particularly for high-demand applications requiring extensive parallel processing.

Google Vertex AI: Google’s Vertex AI offers an end-to-end platform for deploying, managing, and scaling machine learning models, with strong integration into the Google Cloud ecosystem. Vertex AI benefits from Google’s robust infrastructure and ease of use for both training and inference workloads. However, it may not offer the same containerization flexibility and edge deployment capabilities as NVIDIA NIM, which is tailored for performance and efficient resource usage in both cloud and edge environments.

IBM Watson Machine Learning: IBM’s Watson Machine Learning platform supports a range of AI workloads and is known for its enterprise-grade capabilities and security features. It’s a strong choice for industries requiring regulatory compliance, such as healthcare and finance. However, for organizations prioritizing GPU-accelerated performance and low-latency inference at scale, NIM’s close alignment with NVIDIA hardware could offer distinct advantages.

Edge Computing and Real-World Applications of NIM

The modularity and efficiency of NVIDIA NIM make it well-suited for edge computing applications, where data is processed closer to the source. Edge AI offers several advantages over cloud-only deployments, including reduced latency, lower bandwidth consumption, and enhanced privacy.

Real-World Use Cases for Edge Deployment

Smart Home Security: By deploying NIM on edge devices, smart home systems can perform face recognition and other security tasks in real time without sending data to the cloud. This not only enhances privacy but also reduces latency, making interactions seamless and secure.

Industrial IoT and Predictive Maintenance: In manufacturing, edge-based AI can monitor equipment and alert operators to potential issues before they result in downtime. This capability is crucial in predictive maintenance, allowing companies to prevent costly equipment failures.

Healthcare Monitoring: Edge AI enables real-time monitoring of patient data. Medical devices equipped with NIM-enabled AI models can provide instant feedback and alert healthcare professionals to potential risks as they arise, which is critical in high-stakes scenarios.

Anticipated Advancements in NVIDIA’s Edge GPU Technology

NVIDIA's commitment to advancing edge AI is evident in platforms like the Jetson AGX Orin, which is built for robotics and autonomous machines. According to NVIDIA, this platform provides 6x the processing power of its predecessor, enabling complex AI computations directly on edge devices. Looking ahead, NVIDIA plans to release new AI chips annually, with the next-generation Rubin platform expected in 2026. This accelerated roadmap aims to enhance AI capabilities significantly, offering more efficient and powerful solutions for edge applications.

Impact on Various Industries

The advancements in edge AI are poised to revolutionize multiple sectors:

Healthcare: Edge AI enables real-time patient monitoring and diagnostics, allowing for immediate interventions and personalized treatment plans.

Manufacturing: Predictive maintenance powered by edge AI can foresee equipment failures, reducing downtime and optimizing production efficiency.

Retail: In-store analytics can enhance customer experiences through personalized recommendations and efficient inventory management.

Transportation: Autonomous vehicles equipped with advanced edge AI can process data locally, improving safety and decision-making capabilities.

For product managers, staying abreast of these developments is crucial. Integrating cutting-edge edge AI technologies can lead to more responsive, efficient, and innovative products, providing a competitive advantage in the marketplace.

Product Management Strategy: Making Informed Choices for AI Workloads

For product managers overseeing AI deployments, selecting the right infrastructure and deployment strategy is essential. Here are key considerations and strategic steps to help guide decision-making:

Cloud vs. Edge Deployment:

Cloud Deployment: Ideal for applications requiring extensive computational resources, such as large-scale data processing or models needing frequent updates. Cloud offers virtually unlimited scaling but can introduce latency for applications with real-time needs.

Edge Deployment: Best for low-latency, real-time applications like smart devices or IoT. Deploying on the edge allows data processing closer to the source, reducing latency and bandwidth costs. Edge deployment is particularly useful in remote or bandwidth-limited environments where immediate response times are critical.

Decision Criteria: Product managers should assess the application’s latency tolerance, data sensitivity, and bandwidth constraints when deciding between cloud and edge. For applications requiring immediate processing or high data privacy, edge is preferable, while cloud is better suited for intensive, centralized data processing.
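The decision criteria above can be written down as a simple rule of thumb. The sketch below is one possible encoding, not a prescribed method: the `WorkloadProfile` fields, the `recommend_deployment` function, and the 150 ms cloud round-trip threshold are all hypothetical values chosen for illustration and should be replaced with measurements from the actual product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WorkloadProfile:
    max_latency_ms: float         # slowest acceptable end-to-end response
    data_must_stay_local: bool    # privacy or regulatory constraint
    required_uplink_mbps: float   # bandwidth needed to send inputs to the cloud
    available_uplink_mbps: float  # bandwidth the site actually has


def recommend_deployment(
    profile: WorkloadProfile, cloud_round_trip_ms: float = 150.0
) -> Tuple[str, List[str]]:
    """Return ("edge" or "cloud", reasons that pushed the workload to edge)."""
    reasons: List[str] = []
    if profile.max_latency_ms < cloud_round_trip_ms:
        reasons.append("latency")
    if profile.data_must_stay_local:
        reasons.append("data sensitivity")
    if profile.required_uplink_mbps > profile.available_uplink_mbps:
        reasons.append("bandwidth")
    # Any single edge-favoring constraint is enough in this simplified rule.
    target = "edge" if reasons else "cloud"
    return target, reasons


in_store_kiosk = WorkloadProfile(100.0, False, 2.0, 50.0)
nightly_reports = WorkloadProfile(5000.0, False, 2.0, 50.0)

print(recommend_deployment(in_store_kiosk))
print(recommend_deployment(nightly_reports))
```

A real decision would also weigh whether the model fits on the edge hardware at all, which this simplified rule leaves out.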

Budgeting for GPU Resources:

GPU costs are a significant part of the AI infrastructure budget. Product managers should consider demand forecasting to predict GPU usage accurately, avoiding over-provisioning while ensuring sufficient resources for peak demand.

Dynamic resource allocation, a feature available in NIM, can help manage costs by adjusting resources in real time based on workload requirements, maximizing GPU utilization.

ROI Calculation: To calculate the return on investment for inference-based AI workloads, product managers can use a combination of performance metrics (such as response time improvements or user engagement metrics) and cost metrics (like GPU hours saved through dynamic batching or edge processing).
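The budgeting steps above reduce to a few lines of arithmetic. The following sketch shows one way to size GPUs for peak demand and compute a simple ROI. Every number in it (peak request rate, per-GPU throughput with and without batching, hourly price, monthly benefit) is an assumed placeholder, and the function names are hypothetical.

```python
import math


def gpus_needed(peak_rps: float, rps_per_gpu: float, headroom: float = 0.2) -> int:
    """GPUs required to serve peak load with a safety margin."""
    return math.ceil(peak_rps * (1 + headroom) / rps_per_gpu)


def monthly_gpu_cost(
    gpu_count: int, hourly_rate_usd: float, hours_per_month: int = 730
) -> float:
    """Cost of running the given number of GPUs around the clock for a month."""
    return gpu_count * hourly_rate_usd * hours_per_month


def roi(monthly_benefit_usd: float, monthly_cost_usd: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (monthly_benefit_usd - monthly_cost_usd) / monthly_cost_usd


# Assumed figures: 120 requests/s at peak, $4 per GPU-hour.
gpus_without_batching = gpus_needed(peak_rps=120, rps_per_gpu=10)
gpus_with_batching = gpus_needed(peak_rps=120, rps_per_gpu=25)

cost_with_batching = monthly_gpu_cost(gpus_with_batching, hourly_rate_usd=4.0)
monthly_savings = monthly_gpu_cost(
    gpus_without_batching - gpus_with_batching, hourly_rate_usd=4.0
)

# Assumed business value attributed to the chatbot per month.
example_roi = roi(monthly_benefit_usd=21_900.0, monthly_cost_usd=cost_with_batching)

print(gpus_without_batching, gpus_with_batching, cost_with_batching, example_roi)
```

The per-GPU throughput figures drive the result, so they are worth measuring on the actual model and hardware before they go into a budget.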

Case Study: Implementing NIM in a Customer Service Chatbot:

Scenario: Imagine a retail company, “ShopSmart,” which wants to enhance its customer service experience by deploying an AI-powered chatbot to handle real-time queries, product recommendations, and personalized support.

Strategy: ShopSmart chooses NVIDIA NIM for its deployment, leveraging both cloud and edge options. During peak shopping hours, NIM’s dynamic batching optimizes GPU usage by processing multiple queries simultaneously on Amazon SageMaker. During off-peak hours, the chatbot runs on in-store edge devices, which answer customer queries locally, save bandwidth, and keep responses fast.

Results: By combining cloud and edge deployments, ShopSmart achieves an optimal balance of responsiveness, cost efficiency, and scalability. GPU costs are managed effectively, and the chatbot provides fast, relevant responses without overwhelming infrastructure costs. Product managers at ShopSmart can monitor metrics like time-to-first-response and user satisfaction to continually optimize the service.

Model Optimization and Performance Monitoring

To maximize the effectiveness of inference-based AI, product managers should consider key performance metrics. NVIDIA NIM supports several optimization techniques, such as model quantization and precision adjustments (like FP8), to reduce memory usage and increase processing speed. These optimizations are particularly advantageous for edge devices, where computational resources are limited.

Metrics for Monitoring Inference Performance

Tracking specific metrics is essential to ensuring optimal performance. Product managers should consider:

Time-to-First-Token: Measures how long the model takes to produce the first token of a response after receiving a request, a key metric for perceived responsiveness.

Inter-Token Latency: Measures the average time between consecutive tokens, crucial for streaming applications where a steady output pace matters.

Total Generation Time: Measures how long the full response takes from request to final token, helping teams gauge efficiency for high-frequency applications.

These metrics provide actionable insights that help product managers refine models, monitor performance, and align with user expectations.
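As a minimal sketch of how the three metrics relate, the function below derives them from timestamps that a client could record while streaming a response. The function name and the sample timestamps are made up for illustration, and production monitoring would aggregate these values across many requests (for example as percentiles).

```python
from typing import Dict, List


def latency_metrics(request_sent_s: float, token_times_s: List[float]) -> Dict[str, float]:
    """Compute per-request latency metrics from token arrival timestamps (seconds)."""
    if not token_times_s:
        raise ValueError("at least one token timestamp is required")
    # Time from sending the request to receiving the first token.
    time_to_first_token = token_times_s[0] - request_sent_s
    # Gaps between each pair of consecutive tokens.
    gaps = [later - earlier for earlier, later in zip(token_times_s, token_times_s[1:])]
    inter_token_latency = sum(gaps) / len(gaps) if gaps else 0.0
    # Time from sending the request to receiving the last token.
    total_generation_time = token_times_s[-1] - request_sent_s
    return {
        "time_to_first_token_s": time_to_first_token,
        "inter_token_latency_s": inter_token_latency,
        "total_generation_time_s": total_generation_time,
    }


metrics = latency_metrics(request_sent_s=0.0, token_times_s=[0.5, 0.75, 1.0, 1.25])
print(metrics)
```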

Leveraging Fine-Tuning and Retrieval-Augmented Generation (RAG)

For specialized applications, product managers might consider fine-tuning and Retrieval-Augmented Generation (RAG). Fine-tuning involves further training a pre-trained model on additional, domain-specific data, enhancing its relevance for specific industry needs. Meanwhile, RAG combines retrieval-based methods with generative AI: it pulls relevant information from external sources at query time and supplies it to the model as context, which improves contextual accuracy.

These methods are invaluable in applications that require a high level of specificity, such as financial analysis or legal document processing. Fine-tuning and RAG allow models to generate more accurate, contextually relevant outputs, improving user satisfaction and engagement.
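The retrieval step in RAG can be sketched in a few lines. The example below deliberately swaps in a simple word-overlap (bag-of-words cosine) score so that it runs with no dependencies; production systems typically use an embedding model and a vector database instead. The documents, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 1) -> str:
    """Place the retrieved passages ahead of the question for the model."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards never expire.",
]
question = "How long does shipping take?"
top_passage = retrieve(question, knowledge_base)[0]
prompt = build_prompt(question, knowledge_base)
print(prompt)
```

The resulting prompt would then be sent to the generative model, which answers from the supplied context rather than from its training data alone.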

NVIDIA’s Model Catalog: Options for Diverse Business Needs

NVIDIA NIM’s model catalog offers a diverse selection of pre-trained models optimized for different domains, from general-purpose language models like nvidia/llama-3.1-nemotron-70b-instruct to industry-specific models for healthcare, retail, and more. Product managers can select from these ready-to-deploy models, reducing time-to-market while ensuring high-quality, contextually relevant outputs. For organizations with specific needs, this catalog provides a solid foundation that can be further customized.
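For a sense of what calling a catalog model looks like, the sketch below builds a chat request body in the OpenAI-style chat-completions format, which NIM language model endpoints are generally described as accepting. Treat this as an assumption to verify against the documentation for the specific model: the endpoint URL, authentication, and supported parameters are deployment-specific and intentionally not shown, and nothing is sent over the network here.

```python
import json
from typing import Any, Dict


def build_chat_request(
    model: str,
    user_message: str,
    max_tokens: int = 256,
    temperature: float = 0.2,
) -> Dict[str, Any]:
    """Assemble a chat-completions style request body (assumed format)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "stream": False,
    }


payload = build_chat_request(
    model="nvidia/llama-3.1-nemotron-70b-instruct",
    user_message="Summarize our return policy in two sentences.",
)
body = json.dumps(payload, indent=2)
print(body)
```

Because the request format is shared across models, switching to a different catalog model is mostly a matter of changing the `model` string, which keeps evaluation of alternatives cheap.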

A New Era in AI with NVIDIA NIM

Inference-based AI workloads are transforming real-time applications, and NVIDIA’s Inference Microservices (NIM) framework empowers product managers to deploy AI models flexibly and efficiently across cloud platforms like Amazon SageMaker and EKS, as well as in edge environments.

NIM’s features, including containerized deployment, dynamic batching, KV caching, and precision optimization, make it well suited to managing resource-intensive AI workloads. As edge computing and real-time engagement grow, leveraging NVIDIA’s GPUs and optimized inference techniques will be crucial for maintaining a competitive edge.

For product managers, mastering inference-based AI and understanding NIM’s capabilities can redefine how they deploy and manage AI solutions, reshaping user experiences and unlocking new opportunities. By exploring NIM’s features, product managers can create scalable, high-performing AI applications that meet the dynamic demands of modern consumers in an AI-driven world.

Disclaimer: The views and insights presented in this blog are derived from information sourced from various public domains on the internet and the author's research on the topic. They do not reflect any proprietary information associated with the company where the author is currently employed or has been employed in the past. The content is purely informative and intended for educational purposes, with no connection to confidential or sensitive company data.